WBIC/BIC

This notebook is a tutorial on using the Watanabe-Bayesian information criterion (WBIC) and the Bayesian information criterion (BIC) for feature selection (Watanabe [2010], McElreath [2015], and Vehtari [2016]). Like other information criteria (AIC, DIC), WBIC and BIC aim to identify the most parsimonious model, i.e., the model that balances fit against complexity. In other words, a model (or set of features) that optimizes WBIC/BIC should neither overfit nor underfit the available data.

In this tutorial a data set is simulated using the damped linear trend (DLT) model. This data set is then used to fit DLT models with varying numbers of features, as well as a local global trend (LGT) model and an Error-Trend-Seasonal (ETS) model. The WBIC/BIC criteria are then shown to favor the true model.

Note that we recommend WBIC for the full Bayesian (MCMC) and SVI estimators, and BIC for the MAP estimator.
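For reference, the textbook BIC of a model with k free parameters fitted to n observations is k·ln(n) − 2·ln(L̂), where L̂ is the maximized likelihood. The sketch below applies that definition to ordinary least-squares fits on toy data. The helper names `gaussian_loglik` and `bic` are illustrative and not part of orbit, and orbit's own `get_bic()` may count parameters differently.

```python
import numpy as np

def gaussian_loglik(resid):
    """Maximized Gaussian log-likelihood given residuals (variance set to its MLE)."""
    n = resid.size
    sigma2 = np.mean(resid ** 2)
    return -0.5 * n * (np.log(2 * np.pi * sigma2) + 1)

def bic(loglik, k, n):
    """Textbook BIC: penalty k*ln(n) minus twice the maximized log-likelihood."""
    return k * np.log(n) - 2 * loglik

rng = np.random.default_rng(0)
n = 365
X = rng.normal(size=(n, 3))
y = 1.0 + 0.8 * X[:, 0] + rng.normal(scale=0.5, size=n)  # only column 0 carries signal

bic_by_k = {}
for k in range(1, 4):
    design = np.column_stack([np.ones(n), X[:, :k]])     # intercept + first k columns
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    # free parameters: k slopes + intercept + noise variance
    bic_by_k[k] = bic(gaussian_loglik(resid), k + 2, n)

print(bic_by_k)
```

Adding a regressor can only raise the maximized likelihood, so each extra column must improve the fit by more than ln(n) on the deviance scale to lower the BIC.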

[1]:

from datetime import timedelta

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import orbit

from orbit.models import DLT, ETS, KTRLite, LGT

from orbit.utils.simulation import make_trend, make_regression

[2]:

print(orbit.__version__)

1.1.3dev

Data Simulation

This block of code creates a random data set (365 observations with 10 features) assuming a DLT model. Of the 10 features, 4 are effective regressors; i.e., they are used in the true model to create the data.

As an exercise, once you have run the code, try changing NUM_OF_EFFECTIVE_REGRESSORS (line 2), SERIES_LEN (line 3), and SEED (line 4) to see how they affect the results.
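To experiment with these settings without re-editing the cell, the simulation can be wrapped in a function. The sketch below is a NumPy-only stand-in: `simulate` is a hypothetical helper, and it does not reproduce the exact behavior of orbit's `make_trend` and `make_regression` (for example, any sparsity or noise those utilities apply).

```python
import numpy as np

def simulate(series_len=365, num_regressors=10, num_effective=4, seed=1):
    """Random-walk trend plus a linear signal from the first `num_effective` columns."""
    rng = np.random.default_rng(seed)
    coefs = rng.uniform(-1, 1, num_effective)
    trend = np.cumsum(rng.normal(0.01, 0.1, series_len))   # drifting random walk
    x = rng.normal(0, 1, (series_len, num_regressors))     # all candidate features
    y = trend + x[:, :num_effective] @ coefs               # the rest are pure noise
    return y - y.min(), x, coefs

for n_eff in (2, 4, 6):
    y_sim, x_sim, coefs_sim = simulate(num_effective=n_eff)
    print(n_eff, y_sim.shape, x_sim.shape, np.round(coefs_sim, 3))
```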

[3]:

NUM_OF_REGRESSORS = 10

NUM_OF_EFFECTIVE_REGRESSORS = 4

SERIES_LEN = 365

SEED = 1

# sample some coefficients

COEFS = np.random.default_rng(SEED).uniform(-1, 1, NUM_OF_EFFECTIVE_REGRESSORS)

trend = make_trend(SERIES_LEN, rw_loc=0.01, rw_scale=0.1)

x, regression, coefs = make_regression(series_len=SERIES_LEN, coefs=COEFS)

# combine trend and the regression

y = trend + regression

y = y - y.min()

x_extra = np.random.normal(0, 1, (SERIES_LEN, NUM_OF_REGRESSORS - NUM_OF_EFFECTIVE_REGRESSORS))

x = np.concatenate([x, x_extra], axis=-1)

x_cols = [f"x{x}" for x in range(1, NUM_OF_REGRESSORS + 1)]

response_col = "y"

dt_col = "date"

obs_matrix = np.concatenate([y.reshape(-1, 1), x], axis=1)

# make a data frame for orbit inputs

df = pd.DataFrame(obs_matrix, columns=[response_col] + x_cols)

# make some dummy date stamp

dt = pd.date_range(start='2016-01-04', periods=SERIES_LEN, freq="1W")

df['date'] = dt

[4]:

print(df.shape)

print(df.head())

(365, 12)

y x1 x2 x3 x4 x5 x6 \

0 4.426242 0.172792 0.000000 0.165219 -0.000000 -0.171564 0.646560

1 5.580432 0.452678 0.223187 -0.000000 0.290559 1.760342 1.809456

2 5.031773 0.182286 0.147066 0.014211 0.273356 -1.536165 -1.029521

3 3.264027 -0.368227 -0.081455 -0.241060 0.299423 0.591493 0.505090

4 5.246511 0.019861 -0.146228 -0.390954 -0.128596 -1.447045 -1.678974

x7 x8 x9 x10 date

0 -0.140274 2.024782 -0.334100 -1.179794 2016-01-10

1 -1.191844 -0.990802 -2.894241 1.757803 2016-01-17

2 0.572564 -0.750318 0.168999 0.524423 2016-01-24

3 1.348104 0.464408 1.263463 -1.705559 2016-01-31

4 0.292021 0.534810 1.463977 -0.137525 2016-02-07

WBIC

In this section, we use the DLT model as an example. DLT models with an increasing number of features are fitted, and the WBIC value of each is calculated.
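For intuition about the "temperature: 5.900" reported in the sampler log: in Watanabe's definition, WBIC is the average negative log-likelihood under a tempered posterior whose inverse temperature is 1/log(n), and log(365) is about 5.9. The sketch below illustrates the idea on a toy conjugate model (Gaussian mean with known variance), where the tempered posterior can be sampled in closed form. It is not orbit's implementation, and orbit may report the value on a different scale (for example, multiplied by 2).

```python
import numpy as np

rng = np.random.default_rng(0)
n = 365
y = rng.normal(loc=1.0, scale=1.0, size=n)    # toy data: y_i ~ N(mu, 1)

temperature = np.log(n)                       # about 5.9 for n = 365
beta = 1.0 / temperature                      # inverse temperature

# Prior mu ~ N(0, tau^2). Raising the likelihood to the power beta keeps the
# posterior Gaussian, so the tempered posterior can be sampled directly.
tau = 10.0
precision = beta * n + 1.0 / tau**2
post_mean = beta * y.sum() / precision
mu_draws = rng.normal(post_mean, np.sqrt(1.0 / precision), size=20_000)

def neg_loglik(mu):
    """Negative log-likelihood of all n points, evaluated for each value in mu."""
    sq = (y ** 2).sum() - 2 * mu * y.sum() + n * mu ** 2   # sum_i (y_i - mu)^2
    return 0.5 * n * np.log(2 * np.pi) + 0.5 * sq

wbic = neg_loglik(mu_draws).mean()            # tempered-posterior average
bic_half = neg_loglik(np.array([y.mean()]))[0] + 0.5 * np.log(n)  # BIC / 2, one parameter
print(f"temperature={temperature:.3f}  WBIC={wbic:.2f}  BIC/2={bic_half:.2f}")
```

For a regular model like this one, the two numbers should nearly coincide; WBIC is designed to remain usable when the regularity assumptions behind BIC do not hold.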

[5]:

%%time
WBIC_ls = []
for k in range(1, NUM_OF_REGRESSORS + 1):
    regressor_col = x_cols[:k]
    dlt_mod = DLT(
        response_col=response_col,
        date_col=dt_col,
        regressor_col=regressor_col,
        seed=2022,
        # fixing the smoothing parameters to learn regression coefficients more effectively
        level_sm_input=0.01,
        slope_sm_input=0.01,
        num_warmup=4000,
        num_sample=4000,
    )
    WBIC_temp = dlt_mod.fit_wbic(df=df)
    print("WBIC value with {:d} regressors: {:.3f}".format(k, WBIC_temp))
    print('------------------------------------------------------------------')
    WBIC_ls.append(WBIC_temp)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 1 regressors: 1202.366

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 2 regressors: 1150.291

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 3 regressors: 1104.491

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 4 regressors: 1054.450

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 5 regressors: 1060.473

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 6 regressors: 1066.991

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 7 regressors: 1073.808

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 8 regressors: 1080.625

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 1000 and samples(per chain): 1000.

WBIC value with 9 regressors: 1084.251

------------------------------------------------------------------

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

WBIC value with 10 regressors: 1088.111

------------------------------------------------------------------

CPU times: user 3.12 s, sys: 4.08 s, total: 7.2 s

Wall time: 4min 37s
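The selected feature count can be read off programmatically. The snippet below uses the WBIC values printed above, copied into a standalone array so it runs without refitting; in the notebook itself, `WBIC_ls` could be used in its place.

```python
import numpy as np

# WBIC values copied from the output above, for 1..10 regressors
wbic_dlt = np.array([1202.366, 1150.291, 1104.491, 1054.450, 1060.473,
                     1066.991, 1073.808, 1080.625, 1084.251, 1088.111])

best_k = int(np.argmin(wbic_dlt)) + 1    # +1 because the loop starts at k = 1
step = np.diff(wbic_dlt)                 # change when one more regressor is added
print("best number of regressors:", best_k)
print("change per added regressor:", np.round(step, 3))
```

WBIC falls with each of the first four regressors and rises with every regressor added after that, which matches the four effective regressors used in the simulation.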

It is also interesting to see whether WBIC can distinguish between model types, rather than only selecting features within one type of model. To that end, the next block fits an LGT model and an ETS model to the data and calculates the WBIC value of each.

Note that WBIC is supported for both the ‘stan-mcmc’ and ‘pyro-svi’ estimators. Currently only the LGT model supports both, so WBIC is calculated for LGT with each estimator.

[6]:

%%time
lgt = LGT(
    response_col=response_col,
    date_col=dt_col,
    regressor_col=regressor_col,
    seasonality=52,
    estimator='stan-mcmc',
    seed=8888,
)
WBIC_lgt_mcmc = lgt.fit_wbic(df=df)
print("WBIC value for LGT model (stan MCMC): {:.3f}".format(WBIC_lgt_mcmc))

lgt = LGT(
    response_col=response_col,
    date_col=dt_col,
    regressor_col=regressor_col,
    seasonality=52,
    estimator='pyro-svi',
    seed=8888,
)
WBIC_lgt_pyro = lgt.fit_wbic(df=df)
print("WBIC value for LGT model (pyro SVI): {:.3f}".format(WBIC_lgt_pyro))

ets = ETS(
    response_col=response_col,
    date_col=dt_col,
    seed=2020,
    # fixing the smoothing parameters to learn regression coefficients more effectively
    level_sm_input=0.01,
)
WBIC_ets = ets.fit_wbic(df=df)
print("WBIC value for ETS model: {:.3f}".format(WBIC_ets))

WBIC_ls.append(WBIC_lgt_mcmc)
WBIC_ls.append(WBIC_lgt_pyro)
WBIC_ls.append(WBIC_ets)

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 225 and samples(per chain): 25.

WARNING:pystan:Maximum (flat) parameter count (1000) exceeded: skipping diagnostic tests for n_eff and Rhat.

To run all diagnostics call pystan.check_hmc_diagnostics(fit)

INFO:orbit:Using SVI (Pyro) with steps: 301, samples: 100, learning rate: 0.1, learning_rate_total_decay: 1.0 and particles: 100.

INFO:root:Guessed max_plate_nesting = 2

WBIC value for LGT model (stan MCMC): 1144.606

INFO:orbit:step 0 loss = 303.3, scale = 0.11508

INFO:orbit:step 100 loss = 116.73, scale = 0.50351

INFO:orbit:step 200 loss = 116.58, scale = 0.5112

INFO:orbit:step 300 loss = 116.84, scale = 0.50453

INFO:orbit:Sampling (PyStan) with chains: 4, cores: 8, temperature: 5.900, warmups (per chain): 225 and samples(per chain): 25.

WBIC value for LGT model (pyro SVI): 1124.428

WARNING:pystan:n_eff / iter below 0.001 indicates that the effective sample size has likely been overestimated

WARNING:pystan:Rhat above 1.1 or below 0.9 indicates that the chains very likely have not mixed

WBIC value for ETS model: 1203.031

CPU times: user 50.9 s, sys: 8.06 s, total: 59 s

Wall time: 57.9 s
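To compare model types at a glance, the printed values can be ranked by their distance from the best WBIC. The snippet below is a small sketch using numbers copied from the outputs above; `DLT_4` denotes the best DLT variant from the earlier loop.

```python
import pandas as pd

wbic = pd.Series({
    "DLT_4": 1054.450,      # best DLT variant from the earlier loop
    "LGT_MCMC": 1144.606,
    "LGT_SVI": 1124.428,
    "ETS": 1203.031,
})

# difference from the best (lowest) WBIC, smallest first
ranking = (wbic - wbic.min()).sort_values().rename("delta_WBIC")
print(ranking)
```

The ETS run above also emitted Rhat and n_eff warnings, so its WBIC value is best read with some caution.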

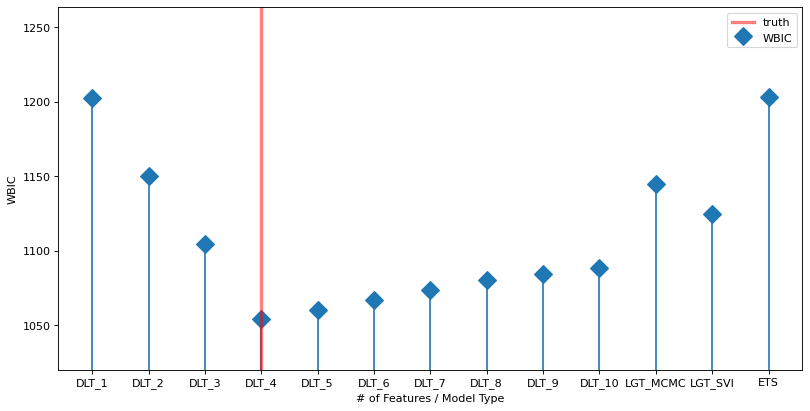

The plot shows the WBIC against the number of features / model type (blue markers). The vertical red line marks the true model. The minimum (optimal) WBIC, 1054.450, is attained at DLT_4, which is the true model.

[7]:

labels = ["DLT_{}".format(x) for x in range(1, NUM_OF_REGRESSORS + 1)] + ['LGT_MCMC', 'LGT_SVI','ETS']

fig, ax = plt.subplots(1, 1,figsize=(12, 6), dpi=80)

markerline, stemlines, baseline = ax.stem(

np.arange(len(labels)), np.array(WBIC_ls), label='WBIC', markerfmt='D')

baseline.set_color('none')

markerline.set_markersize(12)

ax.set_ylim(1020, )

ax.set_xticks(np.arange(len(labels)))

ax.set_xticklabels(labels)

# labels start at DLT_1, so the true model sits at position NUM_OF_EFFECTIVE_REGRESSORS - 1

ax.axvline(x=NUM_OF_EFFECTIVE_REGRESSORS - 1, color='red', linewidth=3, alpha=0.5, linestyle='-', label='truth')

ax.set_ylabel("WBIC")

ax.set_xlabel("# of Features / Model Type")

ax.legend();

BIC

In this section, we use the DLT model as an example. DLT models with an increasing number of features are fitted with the MAP estimator, and the BIC value of each is calculated.

[8]:

%%time
BIC_ls = []
for k in range(0, NUM_OF_REGRESSORS):
    regressor_col = x_cols[:k + 1]
    dlt_mod = DLT(
        estimator='stan-map',
        response_col=response_col,
        date_col=dt_col,
        regressor_col=regressor_col,
        seed=2022,
        # fixing the smoothing parameters to learn regression coefficients more effectively
        level_sm_input=0.01,
        slope_sm_input=0.01,
    )
    dlt_mod.fit(df=df)
    BIC_temp = dlt_mod.get_bic()
    print("BIC value with {:d} regressors: {:.3f}".format(k + 1, BIC_temp))
    print('------------------------------------------------------------------')
    BIC_ls.append(BIC_temp)

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

BIC value with 1 regressors: 1247.445

------------------------------------------------------------------

BIC value with 2 regressors: 1191.889

------------------------------------------------------------------

BIC value with 3 regressors: 1139.408

------------------------------------------------------------------

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

BIC value with 4 regressors: 1079.639

------------------------------------------------------------------

BIC value with 5 regressors: 1082.445

------------------------------------------------------------------

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

BIC value with 6 regressors: 1082.482

------------------------------------------------------------------

BIC value with 7 regressors: 1082.217

------------------------------------------------------------------

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

INFO:orbit:Optimizing (PyStan) with algorithm: LBFGS.

BIC value with 8 regressors: 1081.698

------------------------------------------------------------------

BIC value with 9 regressors: 1078.876

------------------------------------------------------------------

BIC value with 10 regressors: 1187.269

------------------------------------------------------------------

CPU times: user 935 ms, sys: 58.7 ms, total: 994 ms

Wall time: 995 ms
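The printed BIC values can be inspected the same way as the WBIC values. The snippet below copies them into a standalone array (in the notebook, `BIC_ls` could be used instead) and reports each value relative to the true model with 4 regressors.

```python
import numpy as np

# BIC values copied from the output above, for 1..10 regressors
bic_dlt = np.array([1247.445, 1191.889, 1139.408, 1079.639, 1082.445,
                    1082.482, 1082.217, 1081.698, 1078.876, 1187.269])

true_k = 4
best_k = int(np.argmin(bic_dlt)) + 1                 # +1 because counts start at 1
gap_to_truth = bic_dlt - bic_dlt[true_k - 1]         # negative means lower than the true model
print("argmin:", best_k)
print("BIC minus BIC at true model:", np.round(gap_to_truth, 3))
```

In this run the values for 4 through 9 regressors lie within about 3.6 units of one another, so small numerical differences in the MAP fit can change which of them is lowest.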

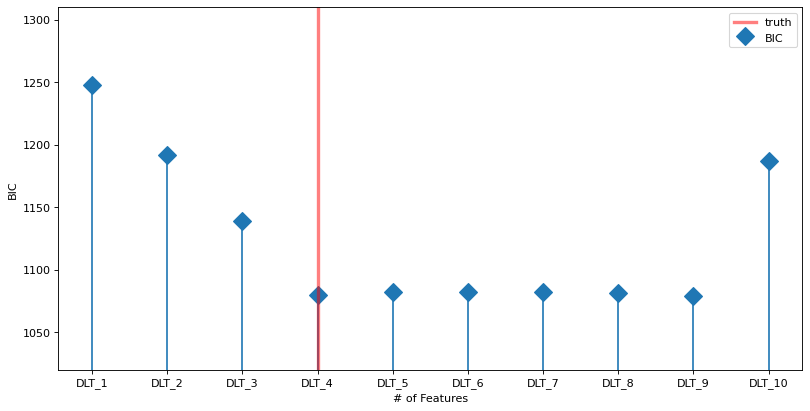

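The plot shows the BIC against the number of features (blue markers). The vertical red line marks the true model. In the output above, BIC falls steeply up to the true model (1079.639 at 4 regressors) and is nearly flat from 4 to 9 regressors; the lowest printed value is 1078.876 at 9 regressors, only 0.763 below the true model, so BIC separates these candidates much less clearly than WBIC does.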

[9]:

labels = ["DLT_{}".format(x) for x in range(1, NUM_OF_REGRESSORS + 1)]

fig, ax = plt.subplots(1, 1,figsize=(12, 6), dpi=80)

markerline, stemlines, baseline = ax.stem(

np.arange(len(labels)), np.array(BIC_ls), label='BIC', markerfmt='D')

baseline.set_color('none')

markerline.set_markersize(12)

ax.set_ylim(1020, )

ax.set_xticks(np.arange(len(labels)))

ax.set_xticklabels(labels)

# labels start at DLT_1, so the true model sits at position NUM_OF_EFFECTIVE_REGRESSORS - 1

ax.axvline(x=NUM_OF_EFFECTIVE_REGRESSORS - 1, color='red', linewidth=3, alpha=0.5, linestyle='-', label='truth')

ax.set_ylabel("BIC")

ax.set_xlabel("# of Features")

ax.legend();

References

Watanabe, Sumio (2010). “Asymptotic Equivalence of Bayes Cross Validation and Widely Applicable Information Criterion in Singular Learning Theory”. Journal of Machine Learning Research. 11: 3571–3594.

McElreath, Richard (2015). “Statistical Rethinking: A Bayesian Course with Examples in R and Stan”. Second Ed. 193-221.

Vehtari, Aki; Gelman, Andrew; Gabry, Jonah (2016). “Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC”.