Using Pyro for Estimation

Note

Pyro support is still experimental and is currently available only for the LGT and KTR models.

Pyro is a flexible, scalable deep probabilistic programming library built on PyTorch. Pyro was originally developed at Uber AI and is now actively maintained by community contributors, including a dedicated team at the Broad Institute.

[1]:

%matplotlib inline

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import orbit

from orbit.models import LGT

from orbit.diagnostics.plot import plot_predicted_data

from orbit.diagnostics.plot import plot_predicted_components

from orbit.utils.dataset import load_iclaims

from orbit.constants.palette import OrbitPalette

[2]:

print(orbit.__version__)

1.1.4.3

[3]:

df = load_iclaims()

[4]:

test_size = 52

train_df = df[:-test_size]

test_df = df[-test_size:]
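The split above is chronological: the last 52 weekly rows (about one year) are held out and nothing is shuffled. A minimal sketch of the same slicing on a synthetic weekly frame (dates and values are made up and only stand in for the iclaims data) shows that the test period begins right after training ends:

```python
import numpy as np
import pandas as pd

# Synthetic weekly frame standing in for the iclaims data (values are made up).
weeks = pd.date_range("2010-01-03", periods=200, freq="W-SUN")
df = pd.DataFrame({"week": weeks, "claims": np.arange(200, dtype=float)})

test_size = 52
train_df = df[:-test_size]  # all but the final 52 rows, in time order
test_df = df[-test_size:]   # final 52 weekly rows (about one year) held out

# Last training week, then first test week.
print(train_df["week"].max().date(), test_df["week"].min().date())
```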

VI Fit and Predict

Although Pyro provides a variety of ways to optimize or sample posteriors, we currently support only Stochastic Variational Inference (SVI). For details, please refer to this doc.
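To make the idea of SVI concrete, here is a minimal NumPy sketch; it is not Pyro or orbit code. It assumes a toy conjugate model (normal likelihood with known noise, normal prior on the mean) and a normal variational guide, and it maximizes a Monte Carlo estimate of the ELBO by stochastic gradient ascent, using several reparameterized samples ("particles") per step. Because the toy model is conjugate, the exact posterior is available to check the answer:

```python
import numpy as np

# Toy model (hypothetical, not an orbit model):
#   y_i ~ Normal(mu, sigma^2) with sigma known, prior mu ~ Normal(0, tau^2).
# Variational guide: q(mu) = Normal(m, s^2) with s = exp(log_s).
rng = np.random.default_rng(8888)
sigma, tau = 1.0, 10.0
y = rng.normal(3.0, sigma, size=50)

def grad_log_joint(mu):
    """d/dmu [log p(y | mu) + log p(mu)], vectorized over particles."""
    return (y.sum() - y.size * mu) / sigma**2 - mu / tau**2

def svi(num_steps=3000, num_particles=100, learning_rate=0.01):
    m, log_s = 0.0, 0.0
    for _ in range(num_steps):
        s = np.exp(log_s)
        eps = rng.standard_normal(num_particles)
        mu = m + s * eps                          # reparameterized draws from q
        g = grad_log_joint(mu)
        grad_m = g.mean()                         # MC estimate of dELBO/dm
        grad_log_s = (g * eps).mean() * s + 1.0   # +1 is d(entropy of q)/d(log_s)
        m += learning_rate * grad_m               # gradient *ascent* on the ELBO
        log_s += learning_rate * grad_log_s
    return m, np.exp(log_s)

m, s = svi()

# Exact posterior of the conjugate model, for comparison.
post_prec = y.size / sigma**2 + 1 / tau**2
post_mean = (y.sum() / sigma**2) / post_prec
post_sd = post_prec ** -0.5
print(f"SVI:   mean={m:.3f}, sd={s:.3f}")
print(f"Exact: mean={post_mean:.3f}, sd={post_sd:.3f}")
```

Pyro automates the gradient step with PyTorch autograd and usually pairs it with an adaptive optimizer such as Adam rather than the plain gradient steps used in this sketch.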

To use SVI for LGT, set estimator='pyro-svi'.

[5]:

lgt_vi = LGT(

response_col='claims',

date_col='week',

seasonality=52,

seed=8888,

estimator='pyro-svi',

num_steps=101,

num_sample=300,

# log a progress message every 50 steps

message=50,

learning_rate=0.1,

)
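With num_steps=101 and message=50, progress should be reported at steps 0, 50 and 100, which is what the training log shows; running 101 steps rather than 100 is what makes step 100 appear. A tiny sketch, assuming (from that log, not from the orbit source) that a message is emitted whenever the 0-indexed step is a multiple of message; logged_steps is a hypothetical helper:

```python
def logged_steps(num_steps, message):
    # Assumed rule, inferred from the log output: report when step % message == 0.
    return [step for step in range(num_steps) if step % message == 0]

print(logged_steps(num_steps=101, message=50))  # [0, 50, 100]
print(logged_steps(num_steps=100, message=50))  # [0, 50]
```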

[6]:

%%time

lgt_vi.fit(df=train_df)

2024-01-07 17:06:45 - orbit - INFO - Using SVI (Pyro) with steps: 101, samples: 300, learning rate: 0.1, learning_rate_total_decay: 1.0 and particles: 100.

2024-01-07 17:06:46 - orbit - INFO - step 0 loss = 658.91, scale = 0.11635

2024-01-07 17:06:49 - orbit - INFO - step 50 loss = -432, scale = 0.48623

2024-01-07 17:06:52 - orbit - INFO - step 100 loss = -444.07, scale = 0.34976

CPU times: user 6.02 s, sys: 611 ms, total: 6.63 s

Wall time: 6.18 s

[6]:

<orbit.forecaster.svi.SVIForecaster at 0x177b14850>
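The fit log also reports learning_rate_total_decay: 1.0, i.e. no decay. One natural reading, assumed here rather than taken from the orbit source, is that the total decay factor is spread evenly over the run, so the final learning rate equals learning_rate * learning_rate_total_decay. Pyro's ClippedAdam optimizer, for example, accepts a per-step multiplicative decay factor (lrd). The lr_schedule helper below is hypothetical:

```python
def lr_schedule(learning_rate, total_decay, num_steps):
    """Hypothetical per-step learning rates under an evenly spread total decay."""
    per_step = total_decay ** (1.0 / num_steps)  # multiplicative factor per step
    return [learning_rate * per_step ** step for step in range(num_steps + 1)]

flat = lr_schedule(0.1, 1.0, 101)      # settings from the log: constant rate
decayed = lr_schedule(0.1, 0.01, 101)  # hypothetical: shrink the rate 100x overall
print(flat[0], flat[-1])
print(decayed[0], decayed[-1])
```

With a total decay of 1.0 the per-step factor is exactly 1, so the learning rate stays at 0.1 for the whole run.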

[7]:

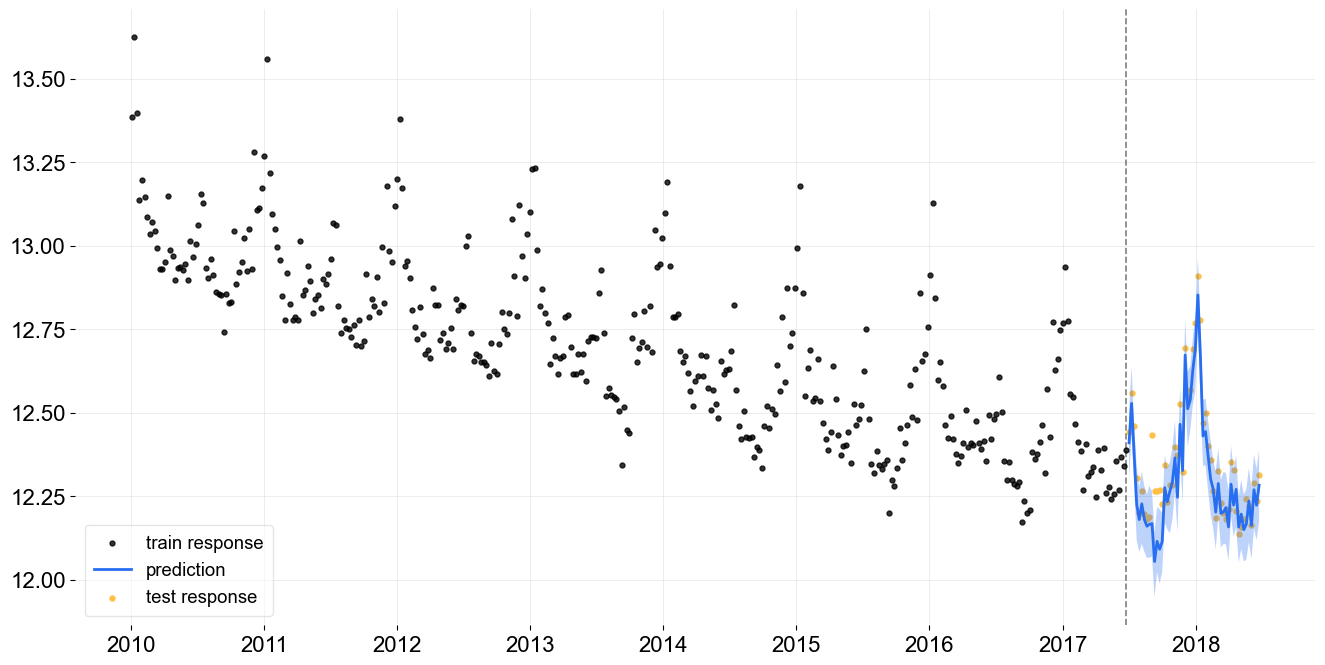

predicted_df = lgt_vi.predict(df=test_df)
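Conceptually, each of the num_sample=300 posterior draws yields one forecast path, and the uncertainty band is a per-date summary of those paths. A minimal NumPy/pandas sketch of that summarization, with simulated random-walk draws standing in for real posterior samples. The column names follow the prediction_5 / prediction / prediction_95 pattern orbit uses with its default percentiles, and the point forecast is taken as the median here; inspect predicted_df.columns to confirm both in your version:

```python
import numpy as np
import pandas as pd

# Simulated stand-in for posterior predictive draws: shape (num_sample, horizon).
rng = np.random.default_rng(0)
num_sample, horizon = 300, 52
samples = 100.0 + rng.normal(0.0, 5.0, size=(num_sample, horizon)).cumsum(axis=1)

# Summarize each forecast date by the 5th, 50th and 95th percentiles across draws.
lower, median, upper = np.percentile(samples, [5, 50, 95], axis=0)
summary = pd.DataFrame({
    "prediction_5": lower,
    "prediction": median,
    "prediction_95": upper,
})
print(summary.head())
```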

[8]:

_ = plot_predicted_data(training_actual_df=train_df, predicted_df=predicted_df,

date_col=lgt_vi.date_col, actual_col=lgt_vi.response_col,

test_actual_df=test_df)

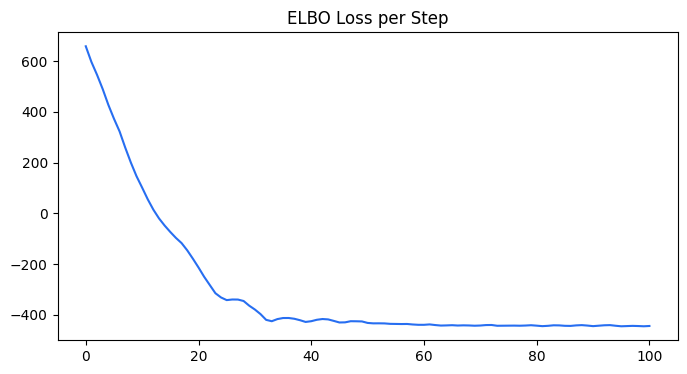
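To put a number on the holdout fit in the plot, a symmetric mean absolute percentage error (SMAPE) can be computed over the 52 test weeks. The function below is a plain NumPy sketch, not orbit's own metric helper, and assumes the point forecast sits in a column named prediction, e.g. smape(test_df['claims'], predicted_df['prediction']):

```python
import numpy as np

def smape(actual, predicted):
    """Symmetric MAPE as a fraction in [0, 2]; multiply by 100 for percent.

    Undefined (NaN) for any point where actual and predicted are both 0.
    """
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    denom = (np.abs(actual) + np.abs(predicted)) / 2.0
    return np.mean(np.abs(actual - predicted) / denom)

print(round(smape([100.0, 200.0], [110.0, 180.0]), 4))  # 0.1003
```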

We can also extract the ELBO loss from the training metrics.

[9]:

loss_elbo = lgt_vi.get_training_metrics()['loss_elbo']
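An informal way to judge whether 101 steps were enough is to compare the mean loss over the last few steps with the window just before it. relative_improvement is a hypothetical heuristic, not part of orbit; it accepts any array-like loss history such as loss_elbo, e.g. relative_improvement(loss_elbo):

```python
import numpy as np

def relative_improvement(loss, window=10):
    """Relative drop in mean loss from the previous window to the last one.

    Positive values mean the loss is still decreasing; values near 0 suggest
    a plateau. Dividing by |previous mean| keeps the sign meaningful when the
    loss is negative, as it is in the training log.
    """
    loss = np.asarray(loss, dtype=float)
    if loss.size < 2 * window:
        raise ValueError("need at least 2 * window loss values")
    prev = loss[-2 * window:-window].mean()
    last = loss[-window:].mean()
    return (prev - last) / abs(prev)

print(relative_improvement(np.arange(20.0, 0.0, -1.0)))  # still decreasing
print(relative_improvement(np.full(20, -444.0)))         # flat: 0.0
```

A small value only suggests a plateau; it does not guarantee that the variational fit has converged.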

[10]:

steps = np.arange(len(loss_elbo))

plt.subplots(1, 1, figsize=(8, 4))

plt.plot(steps, loss_elbo, color=OrbitPalette.BLUE.value)

plt.title('ELBO Loss per Step')

[10]:

Text(0.5, 1.0, 'ELBO Loss per Step')
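For reference, the quantity behind this curve: for latent variables $z$, data $y$ and a guide $q_\phi$, the ELBO and its $K$-particle Monte Carlo estimate are written below (the fit log reports particles: 100, presumably $K$). Pyro reports the loss as the negative ELBO, so a falling curve means a rising ELBO. Because $\log p(y)$ is a log density for continuous data, it can be positive, so negative loss values like those in the log are not a problem in themselves.

```latex
\begin{align*}
\mathrm{ELBO}(\phi)
  &= \mathbb{E}_{q_\phi(z)}\big[\log p(y, z) - \log q_\phi(z)\big]
   = \log p(y) - \mathrm{KL}\big(q_\phi(z) \,\|\, p(z \mid y)\big) \le \log p(y) \\
\widehat{\mathrm{ELBO}}(\phi)
  &= \frac{1}{K} \sum_{k=1}^{K} \big[\log p(y, z_k) - \log q_\phi(z_k)\big],
  \qquad z_k \sim q_\phi(z) \\
\mathrm{loss}(\phi) &= -\widehat{\mathrm{ELBO}}(\phi)
\end{align*}
```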